Future Learners

- Future Learners Overview

- Certification Program Overview

- Admission Requirements

- Apply for Admission to CPAWSB

- Indigenous Learners in Accounting

- Financing Your Education

- Academic Prerequisites Guide

- Talk to an Admission Advisor

- Programs for CPA Members

Current Learners

- Current Learners Overview

- CPA Preparatory Courses

- CPA PEP

- Post-Designation Education

- Indigenous Learners in Accounting

- Learner Support

- Administration

- Learner Initiatives

- Financing Your Education

- Learning Standards

- Learning Partners

Contractors

News

- News Overview

- Latest News & Blog Posts

  - CPA Preparatory Courses: CPA preparatory courses important updates for the 2025/2026 Academic Year (By CPAWSB, Feb 24, 2025)

  - CPAWSB: Announcing the Academic Integrity and Plagiarism Tutorial and Quiz (D2L) (By CPAWSB, Feb 14, 2025)

  - Ask CPAWSB: I want to become a CPA. How do I get started? (By CPAWSB, Feb 10, 2025)

  - Equity Diversity & Inclusion: CPAWSB Introduces Equity, Diversity, and Inclusion (EDI) Resources for Learners and Contractors (By CPAWSB, Feb 3, 2025)

- Candidate Journey E-book 2023

Portals

- Portals

- My CPA Portal: admission applications, transcript assessments, program admission, course and module registration, and address and employment changes.

Desire2Learn (D2L)

Desire2Learn (D2L)

-

Desire2Learn (D2L) portal for course and module online learning environment.Learn More

-

-

National Candidate Portal

National Candidate Portal

-

National Candidate Portal for detailed module results.Learn More

-

-

Contractor Portal

Contractor Portal

-

Discover what opportunities we have and how rewarding it is to give back to your profession.Learn More

-

-

Employer Portal

Employer Portal

-

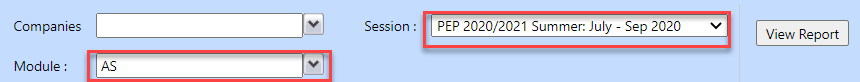

Run reports about CPA PEP candidates employed in your organization.Learn More

-

.png)